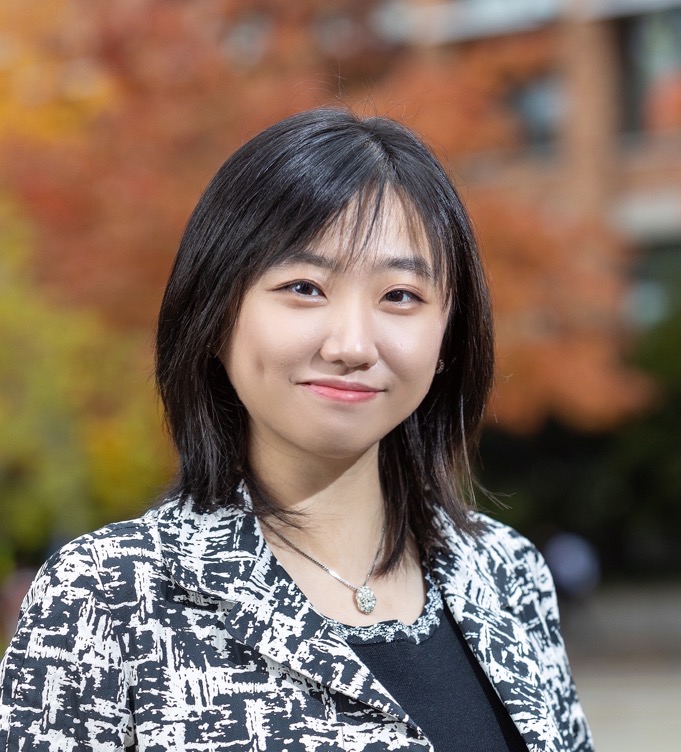

Panelist: Ariel Procaccia

Harvard

Democracy and the Pursuit of Randomness

Abstract

Sortition is a storied paradigm of democracy built on the idea of choosing representatives through lotteries instead of elections. In recent years this idea has found renewed popularity in the form of citizens’ assemblies, which bring together randomly selected people from all walks of life to discuss key questions and deliver policy recommendations. A principled approach to sortition, however, must resolve the tension between two competing requirements: that the demographic composition of citizens’ assemblies reflect the general population and that every person be given a fair chance (literally) to participate. I will describe our work on designing, analyzing and implementing randomized participant selection algorithms that balance these two requirements. I will also discuss practical challenges in sortition based on experience with the adoption and deployment of our open-source system, Panelot.

Biography

Ariel Procaccia is Gordon McKay Professor of Computer Science at Harvard University. He works on a broad and dynamic set of problems related to AI, algorithms, economics, and society. He has helped create systems and platforms that are widely used to solve everyday fair division problems, resettle refugees, mitigate bias in peer review and select citizens’ assemblies. To make his research accessible to the public, he regularly writes opinion and exposition pieces for publications such as the Washington Post, Bloomberg, Wired and Scientific American. His distinctions include the Social Choice and Welfare Prize (2020), Guggenheim Fellowship (2018), IJCAI Computers and Thought Award (2015) and Sloan Research Fellowship (2015).